By now, you’ve undoubtedly heard about virtual production and its ever-growing presence in modern filmmaking. It’s a revolutionary set of technologies that merges the pre-production, principal photography, and post-production processes, blurring the line between the digital and the physical.

So let’s explore the virtual production workflow: What is it, what are its steps, and how does it benefit filmmakers and video professionals? Read on to find out.


What is Virtual Production?

Virtual production uses 3D rendering technology, often in the form of a game engine like Unreal Engine, to construct photorealistic sets that are displayed on large LED screens. The virtual set adjusts in real time to match the perspective of different camera angles and movements, while a camera captures an actor performing in front of the screens.
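For a flat wall, the real-time perspective matching described above reduces to a ray-plane intersection: a virtual object must be drawn where the line from the camera to that object crosses the wall. This is a simplified sketch assuming a pinhole camera and a flat wall lying in the plane z = 0 (units in meters); engines actually render an off-axis camera frustum, but the geometry follows the same idea.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def project_to_wall(camera: Vec3, virtual_point: Vec3) -> tuple[float, float]:
    """Return the (x, y) spot on a flat LED wall in the plane z = 0 where a
    virtual point must be drawn so it lines up from the camera's viewpoint.

    Assumed (hypothetical) layout: the camera stands in front of the wall
    (z > 0) and the virtual scenery sits "behind" it (z < 0).
    """
    dz = virtual_point.z - camera.z
    if dz == 0:
        raise ValueError("ray from camera to point is parallel to the wall")
    t = -camera.z / dz  # ray parameter where the camera-to-point line hits z = 0
    return (camera.x + t * (virtual_point.x - camera.x),
            camera.y + t * (virtual_point.y - camera.y))


mountain = Vec3(0.0, 1.5, -36.0)  # hypothetical distant object, 36 m "behind" the wall
before = project_to_wall(Vec3(0.0, 1.5, 4.0), mountain)
after = project_to_wall(Vec3(2.0, 1.5, 4.0), mountain)  # camera dollies 2 m sideways
print(before, after)
```

Moving the camera 2 m sideways moves the drawn point about 1.8 m, so distant scenery keeps pace with the camera and reads as far away. Recomputing this every frame from tracking data is what produces convincing parallax.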

Virtual Production vs. Traditional Production

“Traditional” production is much like an assembly line: each department works on a linear schedule. Because of the tight timelines involved, iterating on or reshooting scenes is time-consuming and often prohibitively expensive. Filmmakers often shoot scenes without a definite idea of how those scenes will ultimately look.

Virtual production is different. It’s a non-linear, more collaborative, and more iterative method of making video. It combines computer-aided production and real-time visualization tools such as game-engine technology, computer-generated imagery (CGI), virtual reality, and augmented reality to provide a “creative feedback loop in real-time.”

This can save production teams hours and gives them more certainty about look and feel during the pre-production and production phases.

There are several different types of virtual production:

- Visualization: Prototype imagery to show the intent of a shot.

- Performance capture: Recording the movements of actors or objects.

- Hybrid virtual production: Using camera tracking technology and green screen.

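- Live LED wall (in-camera virtual production): Displaying real-time rendered environments on LED walls behind the actors and capturing them directly in camera.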

Virtual production can provide visuals and effects that simply weren’t possible just a few years ago.

Many virtual production studios are known as LED volume stages, and they need a ton of space to operate. One such stage is MELs Studio in Montreal, which is home to LED screens 20 feet high and 65 feet in diameter.

At its core, virtual production marries the physical and virtual worlds to create immersive sets for video production. The physical world, in this case, consists of props and actors while the virtual world includes backgrounds, virtual lighting, and visual effects.

It’s often used for film and television, video games, and even commercial productions from outlets such as The Weather Channel.

The technique has proven immensely popular since The Mandalorian, which began shooting in 2018, made headlines as the first major virtual production. More than 300 virtual stages now exist, up from just three in 2019.

Research and Markets, a research firm, estimates the global virtual production market will grow at a 17.8 percent compound annual growth rate (CAGR) between 2022 and 2030.
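A 17.8 percent CAGR compounds quickly. The arithmetic below shows the growth multiple that rate implies over the eight years from 2022 to 2030. It is only what the quoted rate implies, not an independent forecast.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Factor by which a quantity grows at a constant compound annual growth rate."""
    return (1 + cagr) ** years


# 17.8% CAGR over the eight years from 2022 to 2030
multiple = growth_multiple(0.178, 2030 - 2022)
print(f"{multiple:.2f}x")
```

At that rate, the market would end the period at roughly 3.7 times its 2022 size.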

However, one of the most revolutionary aspects of virtual production isn’t the existence of LED volumes, real-time rendering, or live camera tracking. Rather, it’s that visual effects are applied to scenes throughout the production process, including pre-production, and that high-quality effects and imagery are used from the project’s outset.

Content is more or less camera-ready by the time shooting begins, and iterations can take place at any time. That gives more certainty and flexibility to filmmakers accustomed to compromising on quality and working with visual effects placeholders.

And visual effects and other assets are produced collaboratively rather than in silos, which keeps them compatible across different teams.

Indeed, thanks to virtual production, the old filmmakers’ mantra of “fix it in post” has become “fix it in pre.”


Jump to Section

Workflow | Tools & Software | Benefits | Challenges | Tips | Delivery

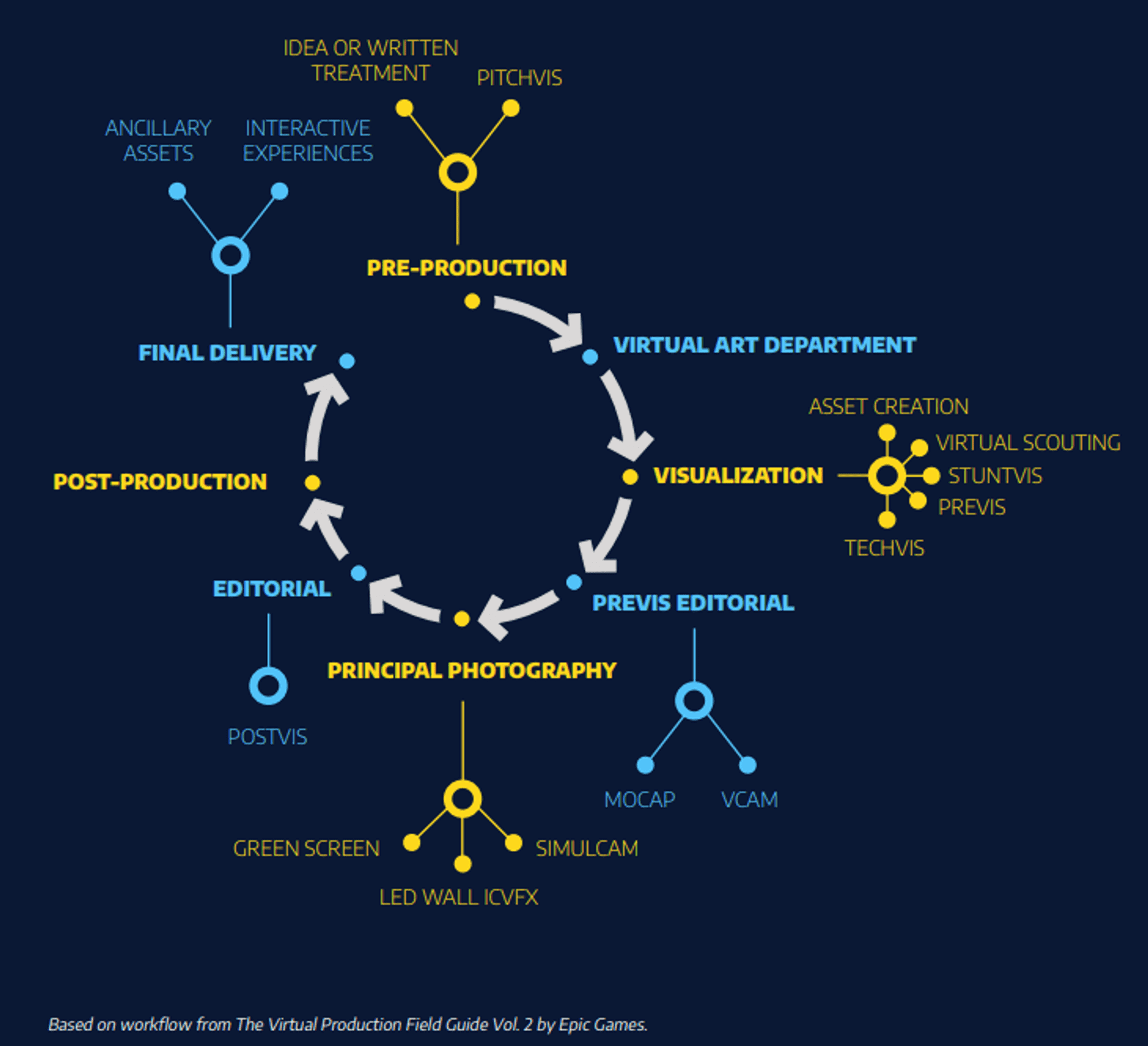

What is the Virtual Production Workflow?

The virtual production workflow is a set of steps that breaks down the virtual production process into a series of manageable tasks. It encompasses all three stages of video production: pre-production, production, and post-production.

Here’s a snapshot of the virtual production workflow:

Source: Unreal Engine

The steps of a virtual production workflow include (but aren’t limited to):

- World capture (including virtual scouting, location scanning, and digitization)

- Visualization

- Performance and motion capture

- Simulcam

- In-camera visual effects (ICVFX)

While every project is slightly different, and the timing of each step varies, the following phases typically define the virtual production pipeline.

Note: Although the virtual production pipeline isn’t as linear as a traditional production, and iterations can occur at any stage, the traditional project phases of pre-production, production, and post-production are still relevant.

Pre-Production

Pre-production in virtual production shares commonalities with traditional filmmaking, as it’s where the production is first visualized for approval.

The pre-production stage takes on outsized importance in the virtual production environment, however, because it’s where most visual effects (VFX) are born and begin to take shape.

Adding and iterating on ICVFX elements during pre-production and production can facilitate tighter integration of live-action and computer-generated content while reducing post-production time.

That can make a big difference in the overall time spent on a project. “Every hour of pre-production is worth two hours of production,” says Zach Alexander, founder of virtual production company Lux Machina.


Pitchvis

During this stage, 3D artists create a digital pitchvis to summarize various scenes within the project — a little bit like a high-tech storyboard but produced more like a movie trailer.

Pitchvis in virtual production relies on game engine technology. The high quality of assets developed within game engine platforms means they can be repurposed throughout the project lifecycle.

Virtual Art Department (VAD)

It’s in pre-production, as well, that two novel entities come into play: The virtual production supervisor and the virtual art department (VAD).

- The VAD designs, creates, and manages all the project’s visuals, including the production of 3D prototypes and camera-ready props and environments. They also decide which elements should appear virtually and which should be physical objects. The VAD typically works closely with the previs team to ensure a seamless transition from idea to iteration.

- The virtual production supervisor is something of a line producer in a virtual production environment. They liaise between the brain bar (the team that manages the technical aspects of virtual shooting), the VFX department, the VAD, and the physical production team.

Visualization

This phase includes asset creation, when artists use game engines, 3D modeling software such as Maya, and motion-capture (mocap) or virtual cameras to produce digital imagery for the props and characters that make up the project’s virtual world.

Source: Before and Afters

3D artists use virtual rapid prototyping (VRP) techniques to quickly create and edit sequences, and photogrammetry to create lifelike 3D models from photographs. Models are continually refined to ensure they’re camera-ready by the time production begins.

The visualization phase also includes virtual scouting, which uses virtual cameras and digital props to let crews explore digital versions of proposed sets and locations.

Other elements of virtual production’s pre-production process include:

- Previs: As in a traditional project, the previsualization stage allows for experimentation with lighting, camera angles and movements, and other elements.

  - Unlike in a traditional project, previs in virtual production is a highly collaborative effort involving multiple stakeholders, including the cinematographer, director, and video editor.

  - Additionally, because image quality and computer performance keep improving, sequences and shots designed in previs are not far from final-pixel image quality. For these and other reasons, previs is often dubbed “the beginning of production” in a virtual production workflow.

- Techvis: Techvis helps VFX artists and others plan specific shots by using camera data and other information to determine the right camera lenses, placements, and movements via motion control. This planning allows directors to control camera movements in very precise and perfectly repeatable increments.

- Stuntvis: Stuntvis uses game physics to plan and test upcoming physical stunts and to finesse details around weapon development, choreography, and other set design elements.
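One basic techvis calculation is how much of the scene a given lens will see, which also tells you how much of the LED wall ends up in frame. The sketch below uses the standard pinhole approximation; the 36 mm sensor width and 5 m camera-to-wall distance are hypothetical values.

```python
import math


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal angle of view for a rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


def frame_width_at(distance_m: float, sensor_width_mm: float, focal_length_mm: float) -> float:
    """Width of scene (meters) filling the frame at a given distance (pinhole approximation)."""
    return distance_m * sensor_width_mm / focal_length_mm


# Hypothetical lens comparison on a 36 mm-wide sensor, LED wall 5 m from camera
for focal in (24, 35, 50, 85):
    fov = horizontal_fov_deg(36.0, focal)
    width = frame_width_at(5.0, 36.0, focal)
    print(f"{focal} mm: {fov:.1f} deg horizontal, {width:.2f} m of wall in frame")
```

The two functions agree with each other: the frame width equals 2 × distance × tan(FOV / 2), so a longer lens both narrows the angle and shrinks the patch of wall that has to look perfect.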

Production (Principal Photography)

As in a traditional project, the production phase (also known as principal photography) is where cameras begin rolling and the film or video takes shape.

In virtual production, it’s also where all those 3D assets and virtual worlds created by the VAD get projected on the LED volumes surrounding actors and (sometimes 3D printed) physical props on virtual production stages.

Source: Unreal Engine

Ambient lighting from the LED walls often replaces traditional lighting on the foreground stage, while virtual lighting is deployed within the virtual world.

Physical cameras equipped with camera-tracking technology are synced with virtual cameras in the game engine (a process known as Simulcam), allowing the virtual scene to move perfectly with the physical cameras.
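Conceptually, syncing a tracked camera to its virtual twin means converting every tracker sample into the engine’s coordinate system. The minimal sketch below assumes both systems share a right-handed, z-up frame related by a single pan offset; the `StageCalibration` values and the 100 units-per-meter scale (an engine that works in centimeters) are hypothetical. Real tracking systems and engine plugins also handle tilt, roll, axis-convention differences, lens distortion, and latency, which this ignores.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraPose:
    """Position and pan (yaw) of a camera. Real systems also track tilt, roll, and lens data."""
    x: float
    y: float
    z: float  # assumed vertical axis
    pan_deg: float  # counterclockwise rotation about z, viewed from above


@dataclass(frozen=True)
class StageCalibration:
    """Hypothetical one-time calibration linking the tracking volume to the virtual world."""
    origin: tuple[float, float, float]  # tracker origin expressed in virtual-world units
    pan_offset_deg: float  # rotation from tracker axes to virtual-world axes
    units_per_meter: float = 100.0  # e.g. an engine that measures in centimeters


def to_virtual_camera(tracked: CameraPose, cal: StageCalibration) -> CameraPose:
    """Map a tracked physical camera pose (meters) onto the virtual camera (engine units)."""
    theta = math.radians(cal.pan_offset_deg)
    # Rotate the tracked position into the virtual world's axes...
    rx = tracked.x * math.cos(theta) - tracked.y * math.sin(theta)
    ry = tracked.x * math.sin(theta) + tracked.y * math.cos(theta)
    s = cal.units_per_meter
    ox, oy, oz = cal.origin
    # ...then scale to engine units and shift by the stage origin.
    return CameraPose(
        x=ox + rx * s,
        y=oy + ry * s,
        z=oz + tracked.z * s,
        pan_deg=(tracked.pan_deg + cal.pan_offset_deg) % 360.0,
    )


# Run once per tracker sample (every frame) so the virtual camera follows the real one.
cal = StageCalibration(origin=(500.0, -200.0, 0.0), pan_offset_deg=90.0)
virtual = to_virtual_camera(CameraPose(1.0, 0.0, 1.5, 10.0), cal)
print(virtual)
```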

Because practically everything in virtual production happens in real time, 3D artists can adjust virtual worlds on the fly to improve lighting or camera angles, or even to refine digital assets.

Although performance capture (or motion capture) is also used in previs, it’s during the production phase that real-time capture of actors’ movements takes place in earnest. Simulcam is used to superimpose actors clad in mocap suits onto virtual sets in real time, allowing virtual scenes to be directed just like physical scenes.

Green screens are also sometimes used at this stage. Indeed, while LED volumes have gotten the lion’s share of recent press, green screens are still used within virtual production environments for live compositing and to make small fixes to shots that can’t be finalized in camera.

Post-Production

Post-production takes far less time in virtual production than in a traditional project because most VFX and even color grading happen during the pre-production and production phases.

VFX

That doesn’t mean there’s nothing for VFX artists to do once shooting wraps, however. As in a traditional project, virtual production video editors often add placeholders to scenes that VFX artists must clean up and finalize (by adding effects or 3D models) in post.


Postvis

Postvis, the final form of visualization, takes place after filming and integrates live-action footage with any rough VFX. Postvis helps post-production VFX teams with the timing and staging of various shots and assists the editorial team in combining all the elements — including live action, previs, and virtual production footage — to create a final cut.


Virtual Production Tools and Software

Virtual production wouldn’t be possible without cutting-edge technology, from end-to-end virtual production software such as ILM StageCraft to one-off tools. Here’s a taste of some of the tools and software virtual production crews use to produce the truly mind-blowing visuals to which we’ve all grown accustomed.

LED walls

Most projects rent a soundstage kitted out with walls made up of interlocking LED panels. These panels are combined into a very large screen and powered by video processing hardware and software. But you can also build an LED volume from scratch or ask one of the many virtual production providers — such as MELs, Line 204, Nant Studios, or Dark Bay — to customize one just for you.
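How large a “very large screen” is in pixels depends on panel size and pixel pitch (the distance between LED centers). This sketch estimates the canvas a video processor must feed for a flat wall; the panel dimensions and the 2.6 mm pitch are hypothetical, and real panels state their exact pixel counts in their specifications.

```python
from __future__ import annotations


def panel_pixels(panel_mm: float, pixel_pitch_mm: float) -> int:
    """Approximate pixels along one panel edge; real panels list exact counts in their specs."""
    return round(panel_mm / pixel_pitch_mm)


def wall_resolution(panels_wide: int, panels_high: int,
                    panel_w_mm: float, panel_h_mm: float,
                    pixel_pitch_mm: float) -> tuple[int, int]:
    """Total pixel canvas for a flat grid of identical panels."""
    return (panels_wide * panel_pixels(panel_w_mm, pixel_pitch_mm),
            panels_high * panel_pixels(panel_h_mm, pixel_pitch_mm))


# Hypothetical wall: 30 x 12 panels of 500 x 500 mm at a 2.6 mm pitch (15 m x 6 m)
width_px, height_px = wall_resolution(30, 12, 500, 500, 2.6)
print(width_px, height_px)
```

A finer pitch packs more pixels into the same wall, which is one reason the rendering and file-size demands discussed later grow so quickly.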

Source: Newsshooter

Game engines

These 3D engines create virtual sets and worlds that can be explored and traversed like a video game. The most popular game engine, Unreal Engine, has been used on productions from The Mandalorian to Westworld. Other game engines used in virtual production include Unity, CryEngine, and Godot.

Powerful graphics processing units (GPUs)

The most powerful game engines in the world are virtually useless without powerful GPUs to run them. The better your GPUs, the more detail you can display on those massive LED volumes. It’s advisable to use a Windows PC for virtual production, since many of the plugins you’ll need, including those for LED tracking, are only available on Windows.

3D capture and animation tools

Photogrammetric tools such as RealityCapture use AI to create 3D models from photos or laser scans, while other 3D applications such as Houdini enable rich 3D animations to create effects using reflections and particles.

3D asset libraries

The existence of the Quixel Megascans library, which bills itself as the world’s largest 3D scan library, means there’s no need to reinvent the wheel when you need 3D props, textures, or environments.

Motion tracking cameras

Precise camera tracking is essential in a virtual production environment. Popular motion tracking systems include OptiTrack and StarTracker.

Virtual reality headsets

VR headsets can be invaluable tools during pre-production and virtual scouting. Crew members can plan shots and set builds by collectively exploring and interacting with 3D environments using headsets such as Somnium VR One and MeganeX.

The most popular virtual production technology, according to a recent survey? Motion capture technology, with more than half of respondents indicating they’d used it in the past 12 months.


Virtual Production Benefits

Virtual production has many benefits. For one, it came into its own during the COVID-19 pandemic, when social distancing made it a valuable way to collaborate with far-flung team members.

But it has plenty of other advantages (some of which we’ve already mentioned). Here are a few of the main benefits of a virtual production pipeline:

1. Time and cost savings

- Virtual scouting using VR headsets and other technology cuts down dramatically on travel time and cost.

- Finalizing major creative decisions earlier in the process means crews are better prepared while actors are on set, making for a less onerous production.

- It’s also far easier to adjust content on the fly as filming takes place, rather than bringing actors back for costly reshoots or spending hours on post-production rendering.

- It lowers the cost of asset creation, as high-fidelity assets created early in the process can be used throughout the production cycle without having to be recreated at a higher resolution.

- It can also lower the cost and effort required for asset management and tracking, since game engines typically have very strong built-in asset tracking systems.

2. Environmentally conscious

Virtual environments can reduce the ecological impact of traditional productions by replacing physical sets and props, which contribute to waste and pollution, with digital ones. They also reduce travel time and distance. For example, if an actor in Los Angeles needs to reshoot a desert scene, driving to a volume stage in L.A. is more environmentally friendly than flying and driving to the original filming location.

And because game engines were designed with “multiplayer” in mind, remote or multi-user collaboration with stakeholders in different physical locations is second nature to virtual production technology. Unreal Engine, for example, integrates with Perforce Helix Core to provide version control on projects with potentially hundreds of collaborators.

3. More refined creative ideas

Starting visuals and shot planning early in the process leads to more iterations and, ultimately, better visuals, environments, and shots. Virtual production offers more options to refine creative ideas because of the ease with which real-time engines can iterate on or customize shots and sequences.

Filmmakers can also lean on the real-time physics provided by game engines to design action sequences or camera movements in a sandbox where the real world’s laws of physics apply.
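Game engines advance their physics in small, fixed timesteps. The much-simplified sketch below applies that idea to a stuntvis-style sanity check: it steps a falling body with semi-implicit Euler integration and compares the landing time to the closed-form answer. The 6 m drop and 1/120 s timestep are hypothetical, and real engine physics adds collisions, drag, and constraints that this ignores.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def simulate_fall(height_m: float, dt: float = 1 / 120) -> float:
    """Step a body dropped from rest at a fixed timestep until it reaches the ground.

    Uses semi-implicit (symplectic) Euler: velocity is updated first, then
    position is advanced with the new velocity. Returns elapsed time in seconds.
    """
    y, vy, t = height_m, 0.0, 0.0
    while y > 0.0:
        vy -= G * dt
        y += vy * dt
        t += dt
    return t


h = 6.0  # hypothetical stunt drop height in meters
simulated = simulate_fall(h)
analytic = math.sqrt(2 * h / G)
print(f"simulated: {simulated:.3f} s, analytic: {analytic:.3f} s")
```

The simulated time lands within one timestep of the analytic value; shrinking the timestep tightens the match at the cost of more computation per frame, the same trade-off a real-time engine balances.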

4. Improved natural lighting

We already mentioned the benefits of ambient light from LED screens. Green screens, by contrast, are notoriously finicky about the lighting setups they work well with, which means more time is required in post to adjust effects and lighting.

5. Real-time visibility

It’s much easier for the cast and crew to get an idea of how shots will look as they perform alongside the action on LED screens, as opposed to acting in front of a green screen and filling in backgrounds and effects later.

6. Environmental control

Crews have complete control over the time of day, lighting, weather, traffic, and other variables that can come into play when shooting on location. Switching scenes in a virtual environment is also much easier (and faster).

7. More immersive environments

Virtual environments created for virtual production can be explored as a 360-degree environment using extended reality technology.


Virtual Production Challenges

Virtual production isn’t without its challenges, either, of course.

1. Change in preparation

Virtual production’s front-loaded workflow can be a difficult adjustment for filmmakers used to post-heavy schedules and unaccustomed to the hours of planning and preparation required before shooting begins.

2. Talent gap

Finding staff with the skills required to work on virtual production projects is also challenging because of the projects’ technical complexity. TV Technology notes that adjacent industries such as VR animation, architecture, and automotive design have become talent sources for virtual production because they demand similar skills.

Indeed, industry initiatives such as Epic Games’ Unreal Fellowship, a blended learning experience meant to educate filmmakers on the nuances of virtual production, aim to disseminate virtual production skills and techniques among filmmakers and video pros.


Tips for Better Virtual Production

As with any workflow, there are right ways and wrong ways to approach a virtual production. Here are a few tips and best practices to keep in mind when starting your next project:

1. Invest in premium graphics hardware

As mentioned above, make sure you have powerful hardware, such as a gaming PC with at least ten CPU cores and a GPU equivalent to the NVIDIA GeForce RTX 3090. A more robust setup, such as the NVIDIA RTX A6000 graphics card combined with the Quadro Sync II Kit, is recommended for the nodes connected to the LED panels’ video processors.
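When several render nodes drive one LED wall, each node typically renders its own slice of the canvas, and sync hardware keeps them presenting the same frame at the same time. The sketch below shows only the arithmetic of an even split by pixel columns; the `split_columns` helper is hypothetical, and tools such as Unreal Engine’s nDisplay define this through their own cluster configuration, usually following the processors’ physical inputs.

```python
from __future__ import annotations


def split_columns(wall_width_px: int, nodes: int) -> list[tuple[int, int]]:
    """Divide a wall's pixel columns into contiguous [start, end) ranges, one per render node.

    When the width doesn't divide evenly, the first nodes each take one extra column.
    """
    if nodes < 1:
        raise ValueError("need at least one render node")
    base, extra = divmod(wall_width_px, nodes)
    ranges, start = [], 0
    for i in range(nodes):
        width = base + (1 if i < extra else 0)
        ranges.append((start, start + width))
        start += width
    return ranges


# Hypothetical 5760-pixel-wide wall driven by four render nodes
print(split_columns(5760, 4))
```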

2. Scale back LED walls to save money

You don’t necessarily have to drop all the dough required to rent a full LED volume with enveloping ceilings and sidewalls. Sometimes, a single-wall LED is all you need. Just be aware that the type of wall you use will greatly impact the amount of ambient light emitted. Hemispherical domes, half-cube, and wall-with-wings setups are considered the best for emissive light, in that order.

3. Take advantage of programmable lighting

If you don’t have enough emissive light, you can use the Digital Multiplex (DMX) protocol to control multicolor lights as effects lighting. Crews can program lighting over DMX from within the game engine or from a lighting board.
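DMX512 carries up to 512 channels per universe, each holding a value from 0 to 255, and a fixture occupies a block of consecutive channels starting at its patched address. This sketch builds one universe’s worth of values in memory. The three-channel RGB fixture profile and the addresses are assumptions, since real fixtures publish their own channel maps, and actually transmitting the frame (for example over Art-Net or sACN) is out of scope.

```python
DMX_UNIVERSE_SIZE = 512  # channels per DMX512 universe, each 0-255


def set_rgb_fixture(universe: bytearray, start_address: int, r: int, g: int, b: int) -> None:
    """Write an RGB color into a fixture patched at a 1-based DMX start address.

    Assumes a hypothetical 3-channel profile: red, green, blue in that order.
    """
    if not 1 <= start_address <= DMX_UNIVERSE_SIZE - 2:
        raise ValueError("fixture does not fit in the universe")
    for offset, value in enumerate((r, g, b)):
        if not 0 <= value <= 255:
            raise ValueError("DMX channel values must be 0-255")
        universe[start_address - 1 + offset] = value  # DMX addresses are 1-based


universe = bytearray(DMX_UNIVERSE_SIZE)
set_rgb_fixture(universe, 1, 255, 140, 0)  # warm "sunset" key light at address 1
set_rgb_fixture(universe, 4, 0, 40, 120)   # cool fill light at address 4
```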

4. Don’t forget color

Understanding color science, especially concerning your specific cameras and LED volume, is crucial to producing the most realistic-looking virtual environments and assets. Ignoring the finer points of colorimetry can lead to disastrous-looking visual artifacts in your final cut.
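A common first step in comparing what a camera records with what an LED wall emits is converting both into a device-independent space such as CIE XYZ. The sketch below uses the sRGB transfer function and the commonly published linear sRGB/Rec. 709 to XYZ matrix (D65 white) as stand-ins; calibrating a real volume means measuring the actual primaries of your panels and cameras and building matrices from those.

```python
from __future__ import annotations


def srgb_decode(v: float) -> float:
    """Convert an sRGB-encoded value (0-1) to linear light using the sRGB transfer function."""
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


# Linear sRGB / Rec. 709 primaries, D65 white point -> CIE 1931 XYZ
RGB709_TO_XYZ = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)


def linear_rgb_to_xyz(rgb: tuple[float, float, float]) -> tuple[float, ...]:
    """Apply the 3x3 matrix to a linear RGB triple."""
    r, g, b = rgb
    return tuple(m0 * r + m1 * g + m2 * b for m0, m1, m2 in RGB709_TO_XYZ)


# Full-intensity white, decoded to linear light first
white = linear_rgb_to_xyz(tuple(srgb_decode(v) for v in (1.0, 1.0, 1.0)))
print(white)
```

The middle row of the matrix gives luminance (Y), which is why white lands at Y = 1. Two devices only match when their measured values agree in a space like this, not when their RGB code values happen to be equal.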

MASV and Virtual Production: A Perfect Match

Virtual production is highly productive for remote teams who collaborate on projects globally. Still, because of the level of detail shown on LED volumes, it requires extremely high-resolution files of up to 12K, and files that size aren’t always easy to accommodate in a remote collaboration.
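To put “up to 12K” in perspective, here is a back-of-the-envelope size for uncompressed footage. The 12288 x 6480 frame size, three channels per pixel, 10 bits per channel, and 24 fps are assumptions for illustration; real 12K footage is usually recorded in compressed RAW or intermediate codecs, so actual files are smaller but still very large.

```python
def uncompressed_frame_bytes(width: int, height: int,
                             channels: int = 3, bits_per_channel: int = 10) -> int:
    """Bytes in one uncompressed frame, assuming tightly packed samples (no padding)."""
    return width * height * channels * bits_per_channel // 8


frame = uncompressed_frame_bytes(12288, 6480)  # assumed 12K frame size
per_second = frame * 24                        # at an assumed 24 frames per second
print(f"{frame / 1e6:.1f} MB per frame, {per_second / 1e9:.2f} GB per second")
```

That works out to roughly 300 MB per frame and about 7 GB for every second of footage.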

Luckily, MASV is the perfect tool for transferring such weighty files quickly, easily, reliably, securely, and cost-effectively.

Start MASV for free today; you get 10 GB free every month. After that, it’s Pay As You Go per transfer for flexibility, or opt for low-cost subscriptions for significant savings. When you sign up, you also get access to:

- Fast, stable file transfers — up to 15 TB per file.

- Upload Portals to receive large files from anyone.

- Easy integrations with popular cloud tools.

- Watch Folders to create transfer automations.

- Premium security controls and the backing of an ISO 27001 certification.

- And a File Transfer API to integrate with your custom delivery workflow.


Frequently Asked Questions

What’s the difference between virtual production and traditional production?

The major difference between virtual and traditional production is that, in virtual production, interactive backgrounds and assets are displayed in real time on massive LED volumes (as opposed to static backgrounds or green screens). Virtual production also requires much more work and preparation during pre-production to ensure 3D environments and assets are camera-ready, compared to the post-heavy traditional production approach.

What are the key factors for considering virtual production?

Virtual production can be especially beneficial for effects-heavy projects that require artificial environments, and for budget-conscious projects that want to save time and money by forgoing in-person location scouting and shooting on location.

How are producers approaching budgets in virtual production?

Virtual production allows producers to develop project budgets with more clarity, because virtual production offers greater control over potentially costly wild-card elements such as the weather or location availability.

How is real-time performance capture used in virtual production workflow?

Real-time performance and motion capture are used in both the pre-production and production phases of a virtual production workflow. Performance capture is often used when visualizing environments and assets in pre-production, and during principal photography when filming actors in motion-capture suits.